This entry describes how I set up an environment on my laptop to learn Foreman. There are other ways to do this, but this worked for me. My goal was to use Foreman to provision VMs with no intervention beyond turning the VM on, using only VirtualBox as my hypervisor. I also wanted to be able to provision CentOS systems while my laptop is not connected to the Internet (e.g. when I am on an airplane). These goals required a variety of network tweaks, which in turn required some reading of Chapter 6 of the VirtualBox manual.

Network Overview

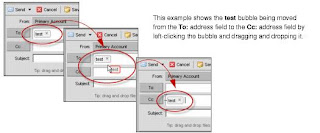

Foreman provisions systems using PXE, which requires DHCP and TFTP. Foreman can take care of TFTP, but in this case I am running a separate machine for DHCP which also acts as a router for the VMs inside my environment. I am calling this machine netman (short for network manager). I am using a couple of NAT hacks to make this work for the situations I will encounter; e.g. Foreman may tell a VM to pull a repo from the Internet, which works as long as dom0 is on the network. I am also keeping a local CentOS mirror for when I am not on the Internet. The diagram above shows the network, ranging from my laptop's wifi connection down into VirtualBox and then into the VMs.

Here is a list of systems and their configurations.

1. dom0: this is my laptop running VirtualBox. It has two network devices:

* en0

** Wifi card which is DHCP'd some NAT'd address depending on my location

** let's assume 192.168.1.10x (RFC1918-C)

* vboxnet1

** This is how my laptop communicates with my VMs

** It is made by VirtualBox: VirtualBox > Preferences > Network > +

** In this case I specified 172.16.1.1/24 with no DHCP server

** I am using a subset of RFC1918-B for this network

2. netman: this is my network manager VM. It has two devices:

* eth0:

** In vbox terms it is Adapter 1, running NAT to reach the Internet

** vbox DHCPs to eth0 from a subset of RFC1918-A with gateway 10.0.2.2

** /etc/sysconfig/network-scripts/ifcfg-eth0 is configured for DHCP

* eth1:

** In vbox terms it is Adapter 2, running Host-only Networking

** The vbox name it uses is vboxnet1

** /etc/sysconfig/network-scripts/ifcfg-eth1 is statically configured

*** 172.16.1.2

netman acts as a router and DHCP server to hosts within 172.16.1.0/24. It uses iptables masquerading to NAT for those hosts so their Internet-bound packets can come in eth1 and go out eth0. Traffic leaving eth0 is itself NAT'd by VirtualBox and might then go out over wifi, which is probably NAT'd as well. So we have some ugly NAT hacks across the three regions of RFC1918 space: B > A > C. It is ugly, but within my VirtualBox environment for learning, I found this sufficient to achieve my goals.

3. client: this could be any VM within my environment

* eth0:

** In vbox terms it is Adapter 1, running Host-only Networking

** The vbox name it uses is vboxnet1

** /etc/sysconfig/network-scripts/ifcfg-eth0 is configured for DHCP

** IP address is DHCP'd from within 172.16.1.0/24

** Gateway is DHCP'd to 172.16.1.2 (eth1 of netman)

** DNS handed out by DHCP server is Google's 8.8.8.8, 8.8.4.4

** Static IPs are assigned by MAC address

** dom0 can use its vboxnet1 device (172.16.1.1) to SSH to client
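The labels RFC1918-A, -B and -C in the list above are my shorthand for the three private ranges 10.0.0.0/8, 172.16.0.0/12 and 192.168.0.0/16. As a rough sketch, a small shell function (the name `rfc1918_block` is made up for this post) can tell which range an address falls in; 10.0.2.15 is used only as an example of an address VirtualBox's NAT might hand to eth0.

```shell
# Classify an IPv4 address into the RFC 1918 block it belongs to.
# Prints A (10.0.0.0/8), B (172.16.0.0/12), C (192.168.0.0/16) or "other".
rfc1918_block() {
    local o1 o2
    o1=${1%%.*}                  # first octet
    o2=${1#*.}; o2=${o2%%.*}     # second octet
    if [ "$o1" -eq 10 ]; then
        echo A
    elif [ "$o1" -eq 172 ] && [ "$o2" -ge 16 ] && [ "$o2" -le 31 ]; then
        echo B
    elif [ "$o1" -eq 192 ] && [ "$o2" -eq 168 ]; then
        echo C
    else
        echo other
    fi
}

# Walk the NAT chain from a client, through netman's eth0, to the wifi side.
for ip in 172.16.1.3 10.0.2.15 192.168.1.100; do
    printf '%s -> %s\n' "$ip" "$(rfc1918_block "$ip")"
done
```

The loop prints B, A and C in that order, which is the B > A > C chain described for netman.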

Configure dom0

Download and install VirtualBox. Virtual machines cannot PXE boot unless you install the extpack:

$ VBoxManage extpack install Oracle_VM_VirtualBox_Extension_Pack-4.2.18-88780.vbox-extpack

0%...10%...20%...30%...40%...50%...60%...70%...80%...90%...100%

Successfully installed "Oracle VM VirtualBox Extension Pack".

$

Create the vboxnet1 network: VirtualBox > Preferences > Network > +

Configure the IP 172.16.1.1 and netmask 255.255.255.0 and leave DHCP disabled since netman will be the DHCP server.
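If you prefer the command line to the Preferences dialog, the same address can probably be set with `VBoxManage hostonlyif ipconfig`. This is only a sketch: it assumes the interface already exists and is really named vboxnet1 (`VBoxManage hostonlyif create` picks the next free name, so check with `VBoxManage list hostonlyifs`), and the helper name `hostonly_ipconfig_cmd` is made up. The function only prints the command so it can be reviewed before it is run.

```shell
# Print the VBoxManage command that assigns an address to a host-only interface.
hostonly_ipconfig_cmd() {
    local ifname="$1" ip="$2" netmask="$3"
    printf 'VBoxManage hostonlyif ipconfig %s --ip %s --netmask %s\n' \
        "$ifname" "$ip" "$netmask"
}

# Review the printed command first; to run it for real, pipe it to sh:
#   hostonly_ipconfig_cmd vboxnet1 172.16.1.1 255.255.255.0 | sh
hostonly_ipconfig_cmd vboxnet1 172.16.1.1 255.255.255.0
```

Afterwards it is still worth confirming in the GUI that no DHCP server is enabled on the interface, since netman is meant to be the only one.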

Configure netman

I keep a minimally installed RHEL6 VM within VirtualBox to clone, so I cloned that into netman.

Add two network devices

Configure Adapter 1 for NAT:

Configure Adapter 2 for Host-only networking using vboxnet1:
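The two adapter settings can also be sketched with `VBoxManage modifyvm`. The assumptions here are that the VM is registered under the name netman, that it is powered off, and that your VirtualBox version accepts the `--nic<N>` and `--hostonlyadapter<N>` options (the 4.x series used in this post does). The helper name `nic_cmds` is made up, and it only prints the commands.

```shell
# Print the VBoxManage commands that give a VM a NAT adapter (Adapter 1)
# and a host-only adapter (Adapter 2) attached to the given interface.
nic_cmds() {
    local vm="$1" hostonly_if="$2"
    printf 'VBoxManage modifyvm %s --nic1 nat\n' "$vm"
    printf 'VBoxManage modifyvm %s --nic2 hostonly --hostonlyadapter2 %s\n' \
        "$vm" "$hostonly_if"
}

# Review the output, then pipe it to sh to apply it:
#   nic_cmds netman vboxnet1 | sh
nic_cmds netman vboxnet1
```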

Start netman with VirtualBox. As eth0 and eth1 try to come up you might see a message like:

Device eth0 does not seem to be present, delaying initialization

Edit /etc/sysconfig/network-scripts/ifcfg-eth{0,1} to set the correct MAC addresses and then run:

rm /etc/udev/rules.d/70-persistent-net.rules

udevadm trigger
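Editing HWADDR by hand in every clone gets old quickly. Here is a minimal sketch of a helper (the name `set_hwaddr` is made up) that replaces the HWADDR line in an ifcfg file or appends one if it is missing. It is demonstrated on a temporary file; the MAC 08:00:27:AA:BB:CC stands in for whatever stale address the clone inherited.

```shell
# Set HWADDR in an ifcfg-style file, replacing an existing line or appending one.
set_hwaddr() {
    local cfg="$1" mac
    mac=$(printf '%s' "$2" | tr 'a-f' 'A-F')    # the ifcfg files above use upper case
    if grep -q '^HWADDR=' "$cfg"; then
        sed "s/^HWADDR=.*/HWADDR=$mac/" "$cfg" > "$cfg.tmp" && mv "$cfg.tmp" "$cfg"
    else
        printf 'HWADDR=%s\n' "$mac" >> "$cfg"
    fi
}

# Demonstration on a scratch copy rather than the real file.
demo=$(mktemp)
printf 'DEVICE=eth0\nONBOOT=yes\nBOOTPROTO=dhcp\nHWADDR=08:00:27:AA:BB:CC\n' > "$demo"
set_hwaddr "$demo" 08:00:27:20:c2:8e
cat "$demo"
rm -f "$demo"
```

On the real system the first argument would be /etc/sysconfig/network-scripts/ifcfg-eth0 and the second the MAC address shown in the VM's adapter settings in VirtualBox.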

Configure eth0 for DHCP:

[root@netman ~]# cat /etc/sysconfig/network-scripts/ifcfg-eth0

DEVICE=eth0

ONBOOT=yes

BOOTPROTO=dhcp

HWADDR=08:00:27:20:C2:8E

[root@netman ~]#

Configure eth1 with a static address and to act as a router:

[root@netman ~]# cat /etc/sysconfig/network-scripts/ifcfg-eth1

BOOTPROTO=none

PEERDNS=yes

HWADDR=08:00:27:62:E4:C1

TYPE=Ethernet

IPV6INIT=no

DEVICE=eth1

NETMASK=255.255.255.0

BROADCAST=""

IPADDR=172.16.1.2 # Gateway of the LAN

NETWORK=172.16.1.0

USERCTL=no

ONBOOT=yes

[root@netman ~]#

Configure iptables so it will NAT

# Flush the firewall

iptables -F

iptables -t nat -F

iptables -t mangle -F

iptables -X

iptables -t nat -X

iptables -t mangle -X

# Set up IP FORWARDing and Masquerading

iptables -t nat -A POSTROUTING -o eth0 -j MASQUERADE

iptables -A FORWARD -i eth1 -j ACCEPT

# Enable packet forwarding by kernel

# You should save this setting in /etc/sysctl.conf too

echo 1 > /proc/sys/net/ipv4/ip_forward

# Apply the configuration

service iptables save

service iptables restart
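The echo into /proc above does not survive a reboot. As the comment in the script says, the setting should also go into /etc/sysctl.conf; a minimal sketch of doing that idempotently follows. The function name `persist_ip_forward` is made up, and the demonstration runs against a temporary file rather than the real one.

```shell
# Make sure a sysctl.conf-style file contains "net.ipv4.ip_forward = 1".
persist_ip_forward() {
    local conf="$1"
    if grep -q '^net\.ipv4\.ip_forward' "$conf" 2>/dev/null; then
        # Rewrite the existing line (a stock RHEL6 file sets it to 0).
        sed 's/^net\.ipv4\.ip_forward.*/net.ipv4.ip_forward = 1/' "$conf" > "$conf.tmp" \
            && mv "$conf.tmp" "$conf"
    else
        echo 'net.ipv4.ip_forward = 1' >> "$conf"
    fi
}

# Demonstration on a scratch file.
demo=$(mktemp)
printf 'net.ipv4.ip_forward = 0\nkernel.sysrq = 0\n' > "$demo"
persist_ip_forward "$demo"
cat "$demo"
rm -f "$demo"
```

On netman itself this would be `persist_ip_forward /etc/sysctl.conf` followed by `sysctl -p` to load the file.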

Configure a DHCP server

This borrows from Red Hat's guide.

Install the DHCP server:

yum install dhcp

Configure /etc/dhcp/dhcpd.conf with something like this

option domain-name "example.com";

option domain-name-servers 8.8.8.8, 8.8.4.4;

default-lease-time 600;

max-lease-time 7200;

log-facility local7;

subnet 172.16.1.0 netmask 255.255.255.0 {

range 172.16.1.13 172.16.1.23;

option routers 172.16.1.2;

}

host client {

hardware ethernet 08:00:27:59:59:33;

fixed-address 172.16.1.3;

}

A few things of note:

- I'm using Google's Public DNS servers since it works well everywhere I have been and is sufficient for a test environment.

- I have a range of IPs I will hand out between 172.16.1.13 and 172.16.1.23.

- Hosts that I provision are also getting static assignments by MAC address; e.g. I have an entry for the MAC address of "client" which I will set up next.
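Since every provisioned host gets its own host entry, it can help to generate the stanza instead of typing it. This is only a sketch; the function name `dhcp_host` is made up and it simply prints text in the same shape as the entry for client above.

```shell
# Print a dhcpd.conf host stanza that pins an IP address to a MAC address.
dhcp_host() {
    local name="$1" mac="$2" ip="$3"
    printf 'host %s {\n' "$name"
    printf '  hardware ethernet %s;\n' "$mac"
    printf '  fixed-address %s;\n' "$ip"
    printf '}\n'
}

dhcp_host client 08:00:27:59:59:33 172.16.1.3
```

The output can be appended to /etc/dhcp/dhcpd.conf. Running `dhcpd -t -cf /etc/dhcp/dhcpd.conf` should check the syntax before dhcpd is restarted. Note that the fixed addresses used in this post (172.16.1.3 and up) sit outside the dynamic range of 172.16.1.13 to 172.16.1.23, so the two do not collide.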

Start dhcpd and configure it to start on boot.

/etc/init.d/dhcpd start

chkconfig dhcpd on

Configure a static CentOS Mirror

netman will serve this mirror over HTTP so install Apache.

yum install httpd

service httpd start

chkconfig httpd on

Next we will get content to serve the mirror.

- Download a CentOS ISO file and store it somewhere on dom0.

- Within VirtualBox's entry for netman go to Settings > Storage and then add a virtual IDE device which is the ISO file.

Next we will mount the ISO file under the web tree. To do this I made a directory:

mkdir -p /var/www/html/6.4/os/x86_64

and then put the following in my /etc/fstab:

/dev/cdrom1 /var/www/html/6.4/os/x86_64 iso9660 ro 0 0

After a "mount -a", I was then able to point a browser at http://172.16.1.2/6.4/os/x86_64/ and see what I expected to see. The mirror of a static ISO might get out of date but will get the system booted if it doesn't have Internet access and I could yum upgrade it later.

Configure Client

I am showing the network configuration as it would apply to a generic RHEL6 or CentOS6 box. Later we will let Foreman provision these configurations.

Set up a basic RHEL6 box (perhaps from a clone) and configure Adapter 1 as a Host-only Adapter using the vboxnet1 network. Boot the system and configure eth0 for DHCP:

[root@client ~]# cat /etc/sysconfig/network-scripts/ifcfg-eth0

DEVICE=eth0

ONBOOT=yes

BOOTPROTO=dhcp

HWADDR=08:00:27:59:59:33

[root@client ~]#

Restart networking and "tail -f /var/log/messages" on netman to see your client boot and get an address from your DHCP server.

Oct 18 18:00:41 netman dhcpd: DHCPREQUEST for 172.16.1.3 from 08:00:27:59:59:33 via eth1

Oct 18 18:00:41 netman dhcpd: DHCPACK on 172.16.1.3 to 08:00:27:59:59:33 via eth1

If you can then SSH into the client and resolve Internet hosts from the client, then you have a working network configuration and can move on to configuring Foreman.

Set up some form of DNS (even a hack)

Until I set up a DNS server in my environment, the /etc/hosts file on every system in this environment contains the following:

172.16.1.1 laptop laptop.example.com

172.16.1.2 netman netman.example.com

172.16.1.3 client client.example.com

172.16.1.4 puppet puppet.example.com

172.16.1.4 foreman foreman.example.com

172.16.1.5 unattended unattended.example.com

Note that we will configure 172.16.1.4 next and that it will run Puppet and Foreman. At the end we'll configure unattended, which will be an unattended install.
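Because the same entries have to land on every system, here is a rough sketch of adding them without creating duplicates. The helper name `add_host` is made up; it appends a line only if the FQDN is not already listed as a name in the file. The demonstration uses a temporary file in place of /etc/hosts.

```shell
# Append "ip short fqdn" to a hosts file unless the FQDN is already listed.
add_host() {
    local file="$1" ip="$2" short="$3" fqdn="$4"
    if awk -v h="$fqdn" '{ for (i = 2; i <= NF; i++) if ($i == h) found = 1 }
                         END { exit !found }' "$file" 2>/dev/null; then
        return 0    # already present, nothing to do
    fi
    printf '%s %s %s\n' "$ip" "$short" "$fqdn" >> "$file"
}

# Demonstration on a scratch file; the second netman call is a no-op.
demo=$(mktemp)
add_host "$demo" 172.16.1.2 netman netman.example.com
add_host "$demo" 172.16.1.2 netman netman.example.com
add_host "$demo" 172.16.1.4 puppet puppet.example.com
cat "$demo"
rm -f "$demo"
```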

Build a host to run Puppet/Foreman

Next I will configure a server running Puppet and Foreman. I will start with a RHEL6 host configured on the network as described above with the generic client. I called my host puppet. Remember to create an entry in netman for DHCP in dhcpd.conf:

host puppet {

hardware ethernet 08:00:27:B9:B3:E8;

fixed-address 172.16.1.4;

}

If you booted this host before adding the entry, it will have received a DHCP'd address from the dynamic range. If you now want it to pick up the static assignment described above, you can revert the lease file as follows:

cd /var/lib/dhcpd/

mv dhcpd.leases~ dhcpd.leases

service dhcpd restart

Once you have a booting vanilla RHEL6 box you can install Puppet and Foreman.

First install EPEL

wget https://dl.fedoraproject.org/pub/epel/6/x86_64/epel-release-6-8.noarch.rpm

yum localinstall epel-release-6-8.noarch.rpm

Install Puppet from their repos. To do this I added the following to /etc/yum.repos.d/puppet.repo

[Puppet]

name=Puppet

baseurl=http://yum.puppetlabs.com/el/6Server/products/x86_64/

gpgcheck=0

[Puppet_Deps]

name=Puppet Dependencies

baseurl=http://yum.puppetlabs.com/el/6Server/dependencies/x86_64/

gpgcheck=0

Install Puppet:

yum -y install puppet facter puppet-server

Then run the Foreman Installer

yum -y install http://yum.theforeman.org/releases/1.3/el6/x86_64/foreman-release.rpm

yum -y install foreman-installer

Answer yes to all defaults. Near the end you should see something like:

...

Notice: Finished catalog run in 294.38 seconds

Okay, you're all set! Check

/usr/share/foreman-installer/foreman_installer/answers.yaml for your

config.

You can apply it in the future as root with:

echo include foreman_installer | puppet apply --modulepath

/usr/share/foreman-installer -v

#

Once Foreman is running you should be able to log in to its web interface at https://puppet.example.com. Read the documentation to find the default username and password. Once you're in, configure Foreman with a smart proxy.

GUI > More > Configuration > Smart Proxies

Make sure the FQDN in /etc/hosts is consistent with the FQDN as defined in /etc/foreman-proxy/settings.yml.

Configure a client to be managed by Puppet

As described in the client section above, set up a basic RHEL6 box (perhaps from a clone) and configure Adapter 1 as a Host-only Adapter using the vboxnet1 network. Take note of its MAC address so you can put an entry in netman's DHCP server. Boot the system and configure eth0 for DHCP.

First install EPEL

wget https://dl.fedoraproject.org/pub/epel/6/x86_64/epel-release-6-8.noarch.rpm

yum localinstall epel-release-6-8.noarch.rpm

Install Puppet from their repos. To do this I added the following to /etc/yum.repos.d/puppet.repo

[Puppet]

name=Puppet

baseurl=http://yum.puppetlabs.com/el/6Server/products/x86_64/

gpgcheck=0

[Puppet_Deps]

name=Puppet Dependencies

baseurl=http://yum.puppetlabs.com/el/6Server/dependencies/x86_64/

gpgcheck=0

Install ruby, puppet, and facter:

yum install ruby ruby-libs puppet facter

Now that the puppet agent should be installed, add "server=puppet.example.com" to /etc/puppet/puppet.conf. Then run "puppet agent --server=puppet.example.com --no-daemonize --verbose" and observe:

[root@puppet-agent ~]# puppet agent --server=puppet.example.com --no-daemonize --verbose

info: Creating a new SSL key for puppet-agent.example.com

info: Caching certificate for ca

info: Creating a new SSL certificate request for puppet-agent.example.com

info: Certificate Request fingerprint (md5): EA:5E:82:B8:A8:BA:8D:7E:5B:3D:20:2C:68:06:B0:04

...

notice: Did not receive certificate

...

The above sends a certificate signing request to the master. The agent will then check every two minutes whether its certificate has been signed.

Back on puppet.example.com, sign the certificate:

[root@puppet ~]# puppet cert --list

"puppet-agent.example.com" (EA:5E:82:B8:A8:BA:8D:7E:5B:3D:20:2C:68:06:B0:04)

[root@puppet ~]#

[root@puppet-master public_keys]# puppet cert --sign puppet-agent.example.com

notice: Signed certificate request for puppet-agent.example.com

notice: Removing file Puppet::SSL::CertificateRequest puppet-agent.example.com at '/var/lib/puppet/ssl/ca/requests/puppet-agent.example.com.pem'

[root@puppet-master public_keys]#

Then on puppet-agent continue to observe the output of "puppet agent --server=puppet.example.com --no-daemonize --verbose". You should see news of the cert's acceptance:

...

info: Caching certificate for puppet-agent.example.com

notice: Starting Puppet client version 2.6.18

info: Caching certificate_revocation_list for ca

info: Caching catalog for puppet-agent.example.com

info: Applying configuration version '1369603392'

info: Creating state file /var/lib/puppet/state/state.yaml

notice: Finished catalog run in 0.01 seconds

^c

Now run the puppet agent as a daemon and configure it to run on boot:

[root@puppet-agent ~]# service puppet start

Starting puppet: [ OK ]

[root@puppet-agent ~]# chkconfig puppet on

[root@puppet-agent ~]#

You can see how your agent is doing anytime:

[root@puppet-agent ~]# puppet agent --test

Info: Retrieving plugin

Info: Caching catalog for puppet-agent.example.com

Info: Applying configuration version '1383503512'

Notice: Finished catalog run in 0.03 seconds

[root@puppet-agent ~]#

Now that your host is in puppet, you should be able to see it in the Foreman web GUI under Hosts.

Install a module from the puppet forge and push it to puppet-agent

On the puppet master, install jeffmccune/motd.

[root@puppet ~]# cd /usr/share/puppet/modules

[root@puppet modules]# puppet module install jeffmccune/motd

Installed "jeffmccune-motd-1.0.3" into directory: motd

[root@puppet modules]# ls

motd

[root@puppet modules]#

On the same puppet master, define $puppetserver and node list in /etc/puppet/manifests/site.pp

import 'nodes.pp'

$puppetserver = 'puppet.example.com'

Define the nodes in /etc/puppet/manifests/nodes.pp:

node 'puppet-agent.example.com' {

include motd

}

In the above case I am making a place for my puppet-agent node and asking that the motd module be on the agent.

On the agent, wait the default amount of time (30 minutes) or reload puppet.

[root@puppet-agent ~]# service puppet reload

Restarting puppet: [ OK ]

[root@puppet-agent ~]# tail -f /var/log/messages

May 26 19:50:24 puppet-agent puppet-agent[13924]: Restarting with '/usr/sbin/puppetd '

May 26 19:50:25 puppet-agent puppet-agent[14060]: Reopening log files

May 26 19:50:25 puppet-agent puppet-agent[14060]: Starting Puppet client version 2.6.18

May 26 19:50:28 puppet-agent puppet-agent[14060]: (/File[/etc/motd]/content) content changed

'{md5}d41d8cd98f00b204e9800998ecf8427e' to '{md5}1b48863bff7665a65dda7ac9f57a2e8c'

May 26 19:50:28 puppet-agent puppet-agent[14060]: Finished catalog run in 0.02 seconds

Now if I SSH into the agent, I see my new MOTD.

$ ssh puppet-agent -l root

Last login: Sun Nov 3 13:44:16 2013 from dom0

-------------------------------------------------

Welcome to the host named puppet-agent

RedHat 6.4 x86_64

-------------------------------------------------

Puppet: 3.3.0

Facter: 1.7.3

FQDN: puppet-agent.example.com

IP: 172.16.1.6

Processor: Intel(R) Core(TM) i7-3667U CPU @ 2.00GHz

Memory: 490.63 MB

-------------------------------------------------

[root@puppet-agent ~]$

So far we have verified that puppet-agent is able to be managed from Puppet directly after editing nodes.pp on the Puppet server. If we were to now tell Foreman to manage this host and apply the same motd puppet module then we'd see an error. For example, in the GUI under hosts select the puppet-agent host and then:

Edit > Manage Host > Puppet Classes > motd > Submit

While under Edit select "Manage host". If you were to then re-run puppet you'd see:

[root@puppet-agent ~]# puppet agent --test

Info: Retrieving plugin

Error: Could not retrieve catalog from remote server:

Error 400 on SERVER: Duplicate declaration: Class[Motd]

is already declared; cannot redeclare on node puppet-agent.example.com

Warning: Not using cache on failed catalog

Error: Could not retrieve catalog; skipping run

[root@puppet-agent ~]#

My goal is to have Foreman manage my host, and Foreman tells Puppet which hosts and classes it manages through Puppet's ENC API. So rather than edit nodes.pp on my Puppet server directly to tell Puppet which modules to apply to my hosts, I will remove the entry for motd so that my nodes.pp looks like the following:

node 'puppet.example.com' {

}

node 'puppet-agent.example.com' {

}

and then re-run my puppet test:

[root@puppet-agent ~]# puppet agent --test

Info: Retrieving plugin

Info: Caching catalog for puppet-agent.example.com

Info: Applying configuration version '1383513022'

Notice: Finished catalog run in 0.03 seconds

[root@puppet-agent ~]#

The above works because I had told Foreman to apply that motd module.
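To make the ENC idea less abstract: an external node classifier is just an executable that the puppet master runs with a node's name as its argument, and which prints YAML saying which classes that node gets. The master is pointed at it with the `node_terminus = exec` and `external_nodes` settings in puppet.conf. Foreman ships its own ENC script that asks Foreman's database; the function below is NOT that script, only a toy sketch of the protocol with a hard-coded answer and a made-up name (`enc`).

```shell
# Toy ENC: print the YAML a classifier would return for a given node name.
enc() {
    local node="$1"
    echo '---'
    case "$node" in
        puppet-agent.example.com)
            # Same effect as "include motd" in nodes.pp.
            echo 'classes:'
            echo '  - motd'
            ;;
        *)
            # Unknown nodes get no classes.
            echo 'classes: []'
            ;;
    esac
}

enc puppet-agent.example.com
```

Classes from the ENC and from nodes.pp end up in the same catalog, which is why declaring motd in both places produced the duplicate declaration error earlier.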

I can test this further by installing the puppetlabs/ntp module.

[root@puppet ~]# cd /usr/share/puppet/modules

[root@puppet modules]# ls

[root@puppet modules]# puppet module install puppetlabs/ntp -i common

Notice: Preparing to install into /etc/puppet/modules/common ...

Notice: Created target directory /etc/puppet/modules/common

Notice: Downloading from https://forge.puppetlabs.com ...

Notice: Installing -- do not interrupt ...

/etc/puppet/modules/common

└─┬ puppetlabs-ntp (v2.0.0-rc1)

└── puppetlabs-stdlib (v4.1.0)

[root@puppet modules]#

From there I can have the Foreman GUI tell puppet-agent to use that module.

More > Configuration > Puppet Classes > Import from $host > Update

I now see my class name of "ntp" with 19 keys. If I click ntp I can override settings; e.g. add the address of my NTP server. To apply that module to my host I can use the Foreman GUI with:

Hosts > select your host > Edit > Puppet Classes

Then select one of the available classes like NTP and make it an included class and click submit. After you click submit you can have your host check in:

[root@puppet-agent ~]# puppet agent --test

Info: Retrieving plugin

Info: Caching catalog for puppet-agent.example.com

Info: Applying configuration version '1383513022'

Notice: /Stage[main]/Ntp/Service[ntpd]/ensure: ensure changed 'stopped' to 'running'

Info: /Stage[main]/Ntp/Service[ntpd]: Unscheduling refresh on Service[ntpd]

Notice: Finished catalog run in 1.47 seconds

[root@puppet-agent ~]#

You can then view your host and see a report about it. If you click the yaml button, then you can see the config file that was applied to that host.

Configure a VM to be provisioned by Foreman

We'll now configure a VM which is defined in VirtualBox, given a DHCP reservation, and defined in Foreman, such that simply turning the VM on results in it being PXE booted, having the OS installed, and running a puppet agent which will apply all configurations you deem appropriate for the host.

Assuming you have the environment described above, you should be able to set up something similar to what you see in Dominic Cleal's Foreman Quickstart: unattended installation screencast.

Create a new virtual machine called "unattended" in VirtualBox and configure Adapter 1 as a Host-only Adapter using the vboxnet1 network. Take note of its MAC address so you can put an entry in netman's DHCP server. This host's DHCP entry should also contain the IP of the TFTP server as well as the name of the file it should request from the TFTP server in order to boot.

host unattended {

hardware ethernet 08:00:27:3c:b0:a4;

fixed-address 172.16.1.5;

next-server 172.16.1.4;

filename "pxelinux.0";

}

Next we have to define the host in Foreman so that Foreman can configure it. First we need to define the components Foreman uses to describe that host, starting with an operating system in the Foreman GUI:

More > Provisioning > Operating Systems

Define a CentOS 6.4 OS. Then set up a Provisioning Template:

More > Provisioning > Provisioning Templates

Select "PXE Default" and edit the template to point to the local mirror we set up on netman's IP address:

"url --url http://172.16.1.2/6.4/os/x86_64/"

Looking over the script more you will see that the default is configured to install Puppet using EPEL. This is not as current as Puppet's repository but that can be fixed later. Under the Association tab, tick CentOS 6.4 and click Save.

Select "PXE Default PXELinux" and edit the Template so that the last five lines look as follows with a reference to the provisioning template on the Puppet server and click Save.

<% if @host.operatingsystem.name == "Fedora" and @host.operatingsystem.major.to_i > 16 -%>

append initrd=<%= @initrd %> ks=http://172.16.1.4/unattended/provision ks.device=bootif network ks.sendmac

<% else -%>

append initrd=<%= @initrd %> ks=http://172.16.1.4/unattended/provision ksdevice=bootif network kssendmac

<% end -%>

Under Association, associate this template with CentOS 6.4 and click Save.

Associate a partition table with your CentOS 6.4 OS by selecting:

More > Provisioning > Partition Tables

and then setting the Operating System Family to RedHat and clicking Submit.

Define an operating system mirror by selecting:

More > Provisioning > Installation Media > CentOS Mirror

and then edit as follows:

Name: CentOS mirror

Path: http://172.16.1.2/$major.$minor/os/$arch/

Operating System Family: RedHat
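The $major, $minor and $arch placeholders in the path are filled in by Foreman from the operating system and architecture of the host being built. The sketch below only imitates that substitution with sed so you can see which URL the kickstart will end up using; the function name `expand_media_path` is made up and this is not Foreman's own code.

```shell
# Expand $major, $minor and $arch in an installation media path.
expand_media_path() {
    local path="$1" major="$2" minor="$3" arch="$4"
    printf '%s\n' "$path" |
        sed -e "s/\\\$major/$major/g" \
            -e "s/\\\$minor/$minor/g" \
            -e "s/\\\$arch/$arch/g"
}

# Single quotes keep the shell from expanding the placeholders itself.
expand_media_path 'http://172.16.1.2/$major.$minor/os/$arch/' 6 4 x86_64
# prints http://172.16.1.2/6.4/os/x86_64/
```

For CentOS 6.4 on x86_64 the result matches the directory the ISO was mounted under on netman, which is why the mirror was laid out as 6.4/os/x86_64.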

Associate this mirror with the OS.

More > Provisioning > Operating Systems > CentOS 6.4

Tick the Architecture, Partition tables, and Installation Media. Then, under the Templates tab, set Provision to "Kickstart Default" and PXELinux to "Kickstart Default PXELinux" and click Submit.

Define a subnet to build the host within Foreman. In this case I am going to enter the criteria of vboxnet1. From the Foreman GUI:

More > Provisioning > Subnets > New Subnet

Then define the subnet as follows:

Name: quickstart

Network Address: 172.16.1.0

Netmask: 255.255.255.0

Domain: example.com

TFTP Proxy: puppet

Leave the other fields blank and click Submit.

Define the host itself within the Foreman GUI:

Hosts > New Host

Then define the host as follows:

Host Tab:

Name: unattended

Deploy on: Bare Metal

Environment: Production

Puppet CA: puppet

Puppet Master: puppet

Operating System:

Architecture: x86_64

Operating System: CentOS 6.4

Media: CentOS Mirror

Partition Table: RedHat Default

You will also need to fill in the items under the Network tab based on the entries you made for DHCP above. Finally click Submit to enter the host into Foreman's database and have Foreman contact the TFTP server smartproxy so that the smartproxy will load the TFTP server with the desired images to build this host.

Next you can check that the TFTP server entries were created properly. On the puppet master, /var/lib/tftpboot/pxelinux.cfg should contain a file whose name is based on the MAC address of unattended.example.com and /var/lib/tftpboot/pxelinux.cfg/boot should contain CentOS-6.4-x86_64-initrd.img and CentOS-6.4-x86_64-vmlinuz, which can be used to boot.
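When looking for that file, it helps to know how PXELINUX names per-host configs: it asks for "01-" (the ARP type for Ethernet) followed by the MAC address in lower-case hex with dashes instead of colons, before falling back to other names such as default. A small sketch of that conversion follows; the function name `pxe_cfg_name` is made up.

```shell
# Turn a MAC address into the per-host file name PXELINUX requests.
pxe_cfg_name() {
    # Lower-case the hex digits and swap ":" for "-", then add the 01- prefix.
    printf '01-%s\n' "$(printf '%s' "$1" | tr 'A-F:' 'a-f-')"
}

pxe_cfg_name 08:00:27:3C:B0:A4
# prints 01-08-00-27-3c-b0-a4
```

So for the unattended host in this post, the file to look for would be /var/lib/tftpboot/pxelinux.cfg/01-08-00-27-3c-b0-a4.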

Now that you have defined the host in Foreman, test the TFTP server.

[root@netman ~]# tftp puppet

tftp> get pxelinux.0

tftp> quit

[root@netman ~]# ls -lh pxelinux.0

-rw-rw-r--. 1 root root 27K Oct 19 13:46 pxelinux.0

[root@netman ~]#

You should then be able to turn the host on and watch it PXE boot.

If you have trouble getting the host PXE booting see the Unattended Provisioning Troubleshooting flow chart or the Unattended Provisioning wiki entry.

Update 11/16/13: I forgot to mention to make sure you edit /etc/sudoers:

foreman-proxy ALL = NOPASSWD: /usr/bin/puppet cert *

Defaults:foreman-proxy !requiretty

and that /var/log/foreman-proxy/proxy.log is very helpful when debugging.